Face Recognition OpenCV

Parts Used

Raspberry Pi 4 (3b should work)

Logitech USB HD Camera

Python 2 or 3

OpenCV - Docs

The Walk

Ok, so this one was a bit more challenging... though somewhat simplistic. I am playing with the concept of recognition and all signs pointed to opencv.

sudo apt-get install python-opencv

(For Python 3 the package is python3-opencv.)

Though I found quite a few articles regarding this little gem, I could not get them to work. They were all very similar, so I am assuming that everyone is working from the same common script. Starting from an existing opencv python script, I decided to step through it a piece at a time, beginning with the minimum needed to confirm I could talk to the USB camera. Chances are I was missing some modules their script needed, so I wanted to break it down.

After pulling all the juicy bits out of the code I initially came up with the following error:

....... size.width>0 && size.height>0 in function imshow

It turns out that to fix this error all I needed to do was reboot. Something had obviously hung the camera, so no frame was coming back from the device and imshow was being handed an image with no size. I rebooted the pi and ran the VLC video player just to make sure I was getting to the usb camera.. I was.

Script Reference

First Script

Here is the first simple script that now works on my pi4 (better second script below):

#startscript

import sys
import os
import cv2

cap = cv2.VideoCapture(0)

# make sure your xml file is truly an xml file!
faceCascade = cv2.CascadeClassifier("/home/pi/bin/opencv/haarcascade_frontalface_default.xml")

while True:
    # Capture frame-by-frame
    ret, frame = cap.read()
    if not ret:
        # no frame came back (camera busy or hung), so stop here
        # instead of crashing later in cvtColor/imshow
        break

    # the cascade works on a grayscale copy of the frame
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = faceCascade.detectMultiScale(
        gray,
        scaleFactor=1.1,
        minNeighbors=5,
        minSize=(30, 30)
    )
    print("Found {0} faces!".format(len(faces)))

    # draw a green box around each detected face
    for (x, y, w, h) in faces:
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)

    cv2.imshow('frame', frame)

    # press q in the video window to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()

#endscript

Success

At this point I know that opencv is installed, working with python (2.7.. have not tried 3.5 yet) and I can talk to the usb camera.

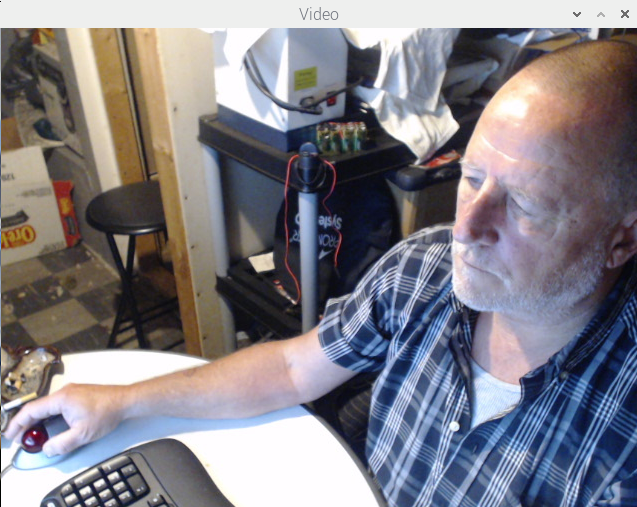

Running the script using python [scriptname] produced a pop up window with the video... Nice.
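
A small guard along these lines would probably have caught the hung camera before imshow did. It is only a sketch: grab_test_frame is a name I made up, and it assumes cap behaves like cv2.VideoCapture (isOpened() returns a bool and read() returns a (ret, frame) pair).

```python
def grab_test_frame(cap, attempts=5):
    """Return the first good frame from cap, or None if the camera never answers.

    cap is assumed to behave like cv2.VideoCapture: isOpened() returns a bool
    and read() returns a (ret, frame) pair.
    """
    if not cap.isOpened():
        return None
    for _ in range(attempts):
        ret, frame = cap.read()
        if ret and frame is not None:
            return frame
    return None
```

With the real camera this would be called as grab_test_frame(cv2.VideoCapture(0)). A None result means the device is busy or hung, which is the situation that gave me the imshow size error.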

Quit

At first I thought that it was expecting a Q on the command line to stop the script, which did not work. After a few Ctrl-C's I realized that the Q it was expecting had to be typed in the video window, since that is where cv2.waitKey() reads its key presses... makes sense.

Next stop.. recognition

Now that I have the video frame working I will start adding things back in to try and get recognition to work.

This looks like it will involve a cascade classifier, which appears to run each frame through a set of trained features stored in an xml file.

The first line I put back in (which was failing earlier) was gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

I am thinking that this is the pixel part: converting each frame to gray scale so the detector has a single brightness channel to work on, but I could be wrong.
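
To make the gray scale step a little less mysterious, here is the weighting that OpenCV documents for the BGR to gray conversion, applied to a single pixel in plain Python. This is an illustration only: bgr_to_gray is my own name, and the real conversion happens for the whole frame inside cv2.cvtColor.

```python
def bgr_to_gray(b, g, r):
    """Brightness of one pixel, using the weights documented for COLOR_BGR2GRAY."""
    # green counts the most and blue the least
    return int(round(0.114 * b + 0.587 * g + 0.299 * r))
```

So a pure green pixel ends up much brighter in the gray image than a pure blue one, and the cascade only ever sees that one brightness value per pixel.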

Running the script again, it did not break anything so I am thinking that the earlier failure was due to the unavailability of the camera. Now we're cookin'.

Loading the cascade required putting back in the lines:

cascPath = "/home/pi/bin/opencv/face.xml"

faceCascade = cv2.CascadeClassifier(cascPath)

My assumption is that it was looking for the reference file. The first line, which was originally set from an incoming command-line argument, I set to a physical path. In one of my earlier searches I came across this link, which fortunately gave me a cascade xml file to download on github. Chances are this will be that person's face but what the heck. I renamed it to simply face.xml and put it in the same directory... it was quite large (8MB). I have a feeling that there is some type of "training" required to generate this file, which I will research next.

I also put in the cascade section of their script.. this will probably be the failure I have been waiting for.

It looks like this section performs the cascade actions, performing the detection.

Error: 'module' object has no attribute 'CV_HAAR_SCALE_IMAGE'

Turns out this has changed... CV_HAAR_SCALE_IMAGE is the name used by scripts written for OpenCV 2.x.

Now it should be flags=cv2.CASCADE_SCALE_IMAGE
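
If a script has to run on both old and new installs, something like the following might do. It is a sketch, not anything from the original script: scale_image_flag is a made-up helper, and it assumes the old constant lives under cv2.cv as it did in OpenCV 2.4.

```python
def scale_image_flag(cv_module):
    """Return the 'scale image' cascade flag for whichever OpenCV is installed."""
    # OpenCV 3 and later expose the constant directly on the module.
    if hasattr(cv_module, "CASCADE_SCALE_IMAGE"):
        return cv_module.CASCADE_SCALE_IMAGE
    # OpenCV 2.4 kept it in the legacy cv2.cv submodule (assumption).
    return cv_module.cv.CV_HAAR_SCALE_IMAGE
```

In the detection call that would become flags=scale_image_flag(cv2).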

more errors.. this is why I love linux....

!empty() in function detectMultiScale

.. and 2 more hours...

Are you serious? Now normally I would not be so dumb, but it is the weekend. On further review, the entire problem from the "get go" was that the stupid XML file, which I thought I had downloaded from a few different places, including github, was not an xml file at all. It was html. Possibly the "save as" in chromium on the pi works differently than I expected.. it saved the source of the web page showing the file rather than the file itself.

pi@wmtp41:~/bin/opencv $ python live.py

current directory is : /home/pi/bin/opencv

Directory name is : opencv

True

I added a few lines.. one to show the dir, which was what I was expecting, and one to fire off the loader for the cascade and print its result.

The loader was coming back with False.. of course.. it could not load a freakin non-xml file.. DOH.

After copying the actual XML from github and saving it into a real XML file, the loader now responded with True.. Success

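I might be missing it but you would think that GH would have a button, right there on the file page.. WHERE YOU COULD DOWNLOAD THE FILE.. That would probably be too easy.. (or I am too limited in knowledge). The Raw button on the file page appears to be the closest thing: it shows the plain file, which can then be saved.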
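
A quick check like this would have saved me those hours. It is a rough sketch and only peeks at the start of the file; sniff_download is my own helper name, not part of OpenCV.

```python
def sniff_download(path):
    """Guess whether a downloaded cascade is real XML or a saved web page.

    Returns "xml", "html" or "unknown" based on the first bytes of the file.
    """
    with open(path, "rb") as fh:
        head = fh.read(256).lstrip().lower()
    if head.startswith(b"<?xml"):
        return "xml"
    if head.startswith(b"<!doctype html") or head.startswith(b"<html"):
        return "html"
    return "unknown"
```

Anything other than "xml" means the cascade loader is going to come back False, and detectMultiScale will then stop with the !empty() error.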

Seriously

So all of that being said.. what should have taken about 10 minutes turned into 3 days of putzing around. Some problems due to outdated code and the main problem.. XML files that were not XML files.. or downloaded incorrectly by moi.

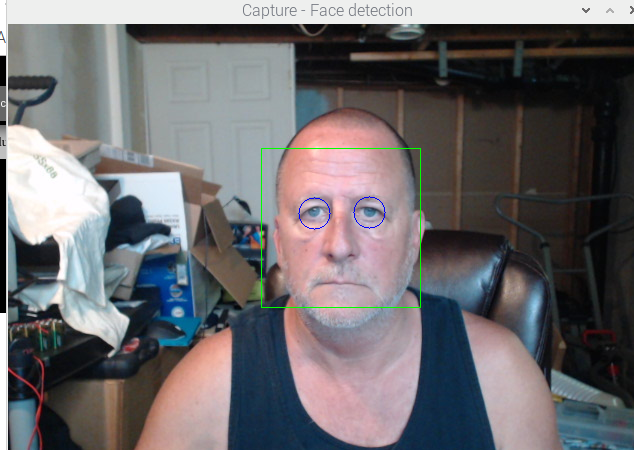

Well, that covered general recognition. I see there is also an eye recognition xml file, which I also downloaded using cut and paste, and I verified it was XML this time. This should allow picking out eyeballs within the captured face box.

Time for more coffee... and maybe some Advil...

Code Demo Eyes & Face

Reference: OpenCV Cascade

I was able to get the example for face and eye tracking to work on my Raspberry Pi 4. Now that I have been through the struggles of actually getting it to work, it is getting easier.

Script 2 face/eyes/output video/tracking overlay:

from __future__ import print_function
import cv2 as cv
import argparse

#create a video writer
#available fourcc codecs:http://www.fourcc.org/codecs.php
#fourcc = cv.VideoWriter_fourcc(*'XVID')
fourcc = cv.VideoWriter_fourcc(*'X264')
#out = cv.VideoWriter('output.avi',fourcc, 20.0, (640,480))
out = cv.VideoWriter('output.mkv',fourcc,5.0, (640,480)) #changed from 20.0 to 5.0 - seemed smoother

def detectAndDisplay(frame):
    eyeCoord=''
    faceColor=(0,255,0)      # BGR order: green
    eyeColor=(0,0,255)       # BGR order: red
    fontColor=(255,255,255)
    font=cv.FONT_HERSHEY_SIMPLEX
    fontPosition = (10,10)
    eyePosition = (20,15)
    fontScale = .5
    lineType = 2             # passed to putText as the line thickness

    frame_gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)
    frame_gray = cv.equalizeHist(frame_gray)

    #-- Detect faces
    faces = face_cascade.detectMultiScale(frame_gray)
    fc=0
    for (x,y,w,h) in faces:
        fc=fc+1
        center = (x + w//2, y + h//2)
        #cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)
        #change face to rectangle
        # frame = cv.ellipse(frame, center, (w//2, h//2), 0, 0, 360, (255, 0, 255), 4)
        frame = cv.rectangle(frame, (x, y), (x+w, y+h), faceColor, 1)
        fontPosition=(x-20,y)
        #eyePosition=(x,y+h)
        faceROI = frame_gray[y:y+h,x:x+w]

        #-- In each face, detect eyes
        eyes = eyes_cascade.detectMultiScale(faceROI)
        for (x2,y2,w2,h2) in eyes:
            # eye boxes are relative to the face box, so add the face offset
            eye_center = (x + x2 + w2//2, y + y2 + h2//2)
            e1=x + x2 + w2//2
            e2=y + y2 + h2//2
            eyeCoord='Eyes:{0} {1}'.format(eye_center,e1-e2)
            print(eyeCoord)
            radius = int(round((w2 + h2)*0.25))
            frame = cv.circle(frame, eye_center, radius, eyeColor, 1)

        # overlay text: face counter next to the box, eye values in the corner
        ovl ="Tracking:{0}".format(fc)
        cv.putText(frame,ovl,fontPosition,font,fontScale,fontColor,lineType)
        cv.putText(frame,eyeCoord,eyePosition,font,fontScale,fontColor,lineType)

    cv.imshow('Capture - Face detection', frame)

parser = argparse.ArgumentParser(description='Code for Cascade Classifier tutorial.')
parser.add_argument('--face_cascade', help='Path to face cascade.', default='/home/pi/bin/opencv/haarcascade_frontalface_alt.xml')
parser.add_argument('--eyes_cascade', help='Path to eyes cascade.', default='/home/pi/bin/opencv/haarcascade_eye_tree_eyeglasses.xml')
parser.add_argument('--camera', help='Camera device number.', type=int, default=0)
args = parser.parse_args()

face_cascade_name = args.face_cascade
eyes_cascade_name = args.eyes_cascade
face_cascade = cv.CascadeClassifier()
eyes_cascade = cv.CascadeClassifier()

#-- 1. Load the cascades (load() returns False if the file is missing or is not a cascade XML)
#if not face_cascade.load('/home/pi/bin/opencv/haarcascade_fullbody.xml'):
if not face_cascade.load(face_cascade_name):
    print('--(!)Error loading face cascade')
    exit(0)
if not eyes_cascade.load(eyes_cascade_name):
    print('--(!)Error loading eyes cascade')
    exit(0)

camera_device = args.camera

#-- 2. Read the video stream
cap = cv.VideoCapture(camera_device)
if not cap.isOpened():
    print('--(!)Error opening video capture')
    exit(0)

while True:
    ret, frame = cap.read()
    if frame is None:
        print('--(!) No captured frame -- Break!')
        break
    detectAndDisplay(frame)
    out.write(frame)
    # ESC (key code 27) in the video window quits
    if cv.waitKey(10) == 27:
        break

# release the camera and finish writing the video file
cap.release()
out.release()
cv.destroyAllWindows()

Note: quitting this script requires the ESC key (pressed in the video window) and not Q for quit. I did not feel like changing or adding it.
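
For anyone who does want both keys, a small helper along these lines should work. It is a sketch under my own naming (is_quit_key and ESC_KEY are not from the tutorial), based on cv.waitKey() returning -1 when nothing was pressed.

```python
ESC_KEY = 27

def is_quit_key(key):
    """True when a cv.waitKey() result means ESC or q/Q was pressed."""
    if key < 0:
        # waitKey returns -1 when no key was pressed within the delay
        return False
    code = key & 0xFF  # keep only the low byte; some platforms set higher bits
    return code == ESC_KEY or code in (ord('q'), ord('Q'))
```

In the main loop the check would then read: if is_quit_key(cv.waitKey(10)): break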

I did find however that this second iteration of the script seemed to work much better.

I was also able to detect a face from across the room.

A few changes I made: the face is now drawn as a rectangle and the eyes as circles. The original drew the face as an ellipse; that line is commented out so you can change it back to an ellipse if you'd like.

The second change was to set the outline thickness to 1 pixel. This kept it from looking so much like a clown as it was detecting. I may also change the colors so both are red.. it should be as easy as changing the color values in the rectangle/circle calls. Keep in mind that OpenCV orders them blue, green, red, so (0, 0, 255) is red and the line below draws green:

cv.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 1)
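
Since the blue, green, red ordering is easy to trip over, here is a tiny sketch of how I would keep the colors straight. The constant and function names are my own, not OpenCV's.

```python
# OpenCV color tuples are ordered (blue, green, red), not (red, green, blue).
BGR_RED = (0, 0, 255)
BGR_GREEN = (0, 255, 0)
BGR_BLUE = (255, 0, 0)

def rgb_to_bgr(rgb):
    """Flip an (R, G, B) tuple into the (B, G, R) order OpenCV drawing calls expect."""
    r, g, b = rgb
    return (b, g, r)
```

Making both outlines red would then just mean passing BGR_RED as the color in both the rectangle and the circle call.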

Finally, the last thing I was playing with was the eye values. You can see that they print out to the command console during execution. My thinking was that somehow you could leverage these values to detect who the person is. Not really biometrics, but I was thinking more that if this person's head and eye correlations come up with the same values then it just "might" be that person.
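
One way that idea could be made a bit more stable is to compare the distance between the two eye centers against the width of the face box, so the number does not change just because the person steps closer to the camera. This is purely my own illustration (eye_spacing_ratio is a made-up helper); it is not a real recognition method and I would not expect it to tell people apart reliably.

```python
import math

def eye_spacing_ratio(eye_a, eye_b, face_width):
    """Distance between two eye centers as a fraction of the face box width."""
    if face_width <= 0:
        raise ValueError("face_width must be positive")
    dist = math.hypot(eye_a[0] - eye_b[0], eye_a[1] - eye_b[1])
    return dist / face_width
```

The eye centers would come from eye_center in the script and the width from w of the face rectangle, and it only makes sense when exactly two eyes were found in the face.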

What was also kind of cool was that holding a magazine up to the camera did recognize the faces in the magazine. To me this was pretty amazing.. though I guess I should not be too impressed.. after all it is still all just coming from an "image".

Overlay

No tracking software would be complete without some overlay text. I have added two text overlays. The first, which follows the face rectangle around, shows the running count of faces found in the scene. The second overlay is the eye tracking mentioned above and sits in the top left corner of the window. This lists the coordinates of the eye center and the (eye x - eye y) calculation.
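
One thing to watch with the overlay that follows the face: the script places it at (x-20, y), which can land off the edge of the frame when the face is near the top or left. The position given to putText is the bottom-left corner of the text, so a clamp like the one below might help. It is a sketch only, and clamp_label_origin is a hypothetical helper of mine.

```python
def clamp_label_origin(x, y, text_w, text_h, frame_w, frame_h):
    """Keep a putText origin (bottom-left corner of the text) inside the frame."""
    cx = max(0, min(x, frame_w - text_w))
    cy = max(text_h, min(y, frame_h - 1))
    return (cx, cy)
```

The text size can be measured with cv.getTextSize(ovl, font, fontScale, lineType), which returns ((width, height), baseline).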

Video Output

Another addition to this script outputs the video to a file. I was thinking it would be helpful to have the output of the recording for future use. You may need to mess with the codecs to get the correct ones that work with your machine, but it is working with x264. For some reason I cannot write x264 to an .mp4 file but am able to output to an .mkv file.
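
The script hard-codes (640,480) for the writer. As far as I can tell, VideoWriter wants the size as (width, height) while frame.shape is (height, width, channels), and frames that do not match the size the writer was opened with tend not to get written. A sketch that sizes the writer from the first frame instead; writer_size and open_writer are my own helper names, and the defaults simply mirror the script above.

```python
def writer_size(frame_shape):
    """Convert a frame's shape (height, width, ...) to VideoWriter's (width, height)."""
    height, width = frame_shape[:2]
    return (width, height)

def open_writer(path, first_frame, fps=5.0, codec="X264"):
    """Open a VideoWriter sized to match first_frame (needs OpenCV installed)."""
    import cv2 as cv  # imported here so writer_size() can be used without OpenCV
    fourcc = cv.VideoWriter_fourcc(*codec)
    out = cv.VideoWriter(path, fourcc, fps, writer_size(first_frame.shape))
    if not out.isOpened():
        raise RuntimeError("could not open %s with codec %s" % (path, codec))
    return out
```

Usage would be out = open_writer('output.mkv', frame) after the first successful cap.read(). For .mp4 output a different fourcc such as mp4v may be worth trying, though I have not tested that on the Pi.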

Cascade XML files

I have attached the more common xml files in a single zip file below. I found that some of the 'alt' versions work better than the standard versions. This should save you some searching. If you download and use the cascade XML files from this thread, be sure to adhere to the licensing information listed in each file.

Files:

| Attachment | Size |
|---|---|
| Zip of the more common cascade XML files | 609.65 KB |
